Reaching Further: Comprehensive Intrinsic Analysis of 56,000+ GitHub Repositories

1. Introduction

In our previous analysis, we used 13,000 repositories to explore intrinsics usage across open-source projects. That initial study provided valuable insights into popular intrinsics and common usage combinations, but we knew we had only scratched the surface.

Today, we're excited to present a dramatically expanded analysis covering more than 56,000 GitHub repositories, over four times our original dataset. To mark this milestone, we've launched a new section on simd.info: Statistics! The Statistics page will be updated frequently to show how intrinsics usage changes across projects and CPU architectures.

This expanded analysis shows what the intrinsics landscape really looks like at scale, revealing usage patterns that only become visible when examining tens of thousands of codebases across diverse projects and architectures. But how did we achieve this?

2. Overcoming Limitations

Scanning so many repositories is not easy, and it would not have been possible without optimizing our scanning tools. Our secret recipe combined Vectorscan, a public fork of Intel's Hyperscan, with a fast compiled language. We chose Zig, a young but promising language, because it is easy to use, already offers useful features despite not having reached version 1.0, and can import C libraries directly. Together, these properties made developing the repository scanner smooth.

Vectorscan: The Engine Behind Our Large-Scale Intrinsics Analysis

Vectorscan is one of our very own projects: a high-performance regular expression matching library designed for speed and portability across hardware platforms. It is a fork of Intel's Hyperscan, which was originally optimized for x86 processors. Vectorscan extends it to other architectures, with SIMD support for Arm Neon and Power VSX. It is among the fastest regular expression matching libraries available and is built for searching massive amounts of text, which made it a perfect fit for this use case. To produce our results, we ran our analyzer on a dual-socket Gigabyte MP72-HB0 system with 160 cores and 380GB of RAM!
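Vectorscan's C API compiles many expressions into a single database (`hs_compile_multi`). It then scans a buffer in one pass and reports each match through a callback (`hs_scan`). The sketch below illustrates only that "many patterns, one pass" idea. It uses Python's standard `re` module as a stand-in for Vectorscan, so it is not the scanner we actually ran. The short `INTRINSICS` list is a hypothetical sample, not the full simd.info catalog.

```python
import re
from collections import Counter

# Hypothetical sample of intrinsic names; a real scan would use the full catalog.
INTRINSICS = ["_mm_add_ps", "_mm_mul_ps", "_mm256_add_ps", "vaddq_f32", "vec_add"]


def compile_patterns(names):
    """Combine all names into one regex so each file is scanned in a single pass.

    This plays the role of a Vectorscan multi-pattern database, using Python's re.
    """
    alternation = "|".join(re.escape(n) for n in names)
    # \b on both sides stops matches inside longer identifiers.
    return re.compile(r"\b(?:" + alternation + r")\b")


def count_matches(pattern, text):
    """Count how often each known intrinsic name appears in text."""
    return Counter(m.group(0) for m in pattern.finditer(text))


source = """
__m128 r = _mm_add_ps(_mm_mul_ps(a, b), c);
float32x4_t s = vaddq_f32(x, y);
__m128 t = _mm_add_ps(r, r);
"""
counts = count_matches(compile_patterns(INTRINSICS), source)
print(dict(counts))  # {'_mm_add_ps': 2, '_mm_mul_ps': 1, 'vaddq_f32': 1}
```

In Vectorscan itself, each pattern carries an integer ID that is passed to the match callback, so a real counter would be indexed by that ID rather than by the matched text.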

Zig: The Language That Powered Our Scanning Tool

Zig is a modern, statically typed, compiled programming language designed for building robust and optimal software. It's a C-like language, offering features like manual memory management, compile-time metaprogramming through comptime, and easy interoperability with C libraries via @cImport. These characteristics made Zig an excellent choice for developing our high-performance scanning tool, enabling efficient and maintainable code across diverse platforms.

Vexu/arocc: The Tokenizer That Enhanced Our Intrinsic Pair and Triplet Analysis

To deepen our analysis of intrinsic usage patterns, particularly in identifying pairs and triplets, we integrated the tokenizer from the arocc project, a C compiler written in Zig. Arocc is designed to provide fast compilation and low memory usage with good diagnostics. Its tokenizer efficiently processes C source code, enabling us to accurately parse and analyze complex intrinsic combinations across a vast number of repositories. By leveraging arocc's tokenizer, we enhanced the precision of our pattern detection, uncovering nuanced optimization opportunities within the codebases we examined.
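To see why a tokenizer helps, consider the minimal sketch below. It is a hypothetical, much-simplified C tokenizer built with Python's `re` module, not arocc's API. It skips comments and string or character literals, so intrinsic names that appear there are not counted. It also returns the remaining intrinsic identifiers in source order, which is what pair and triplet detection needs. The `known` set stands in for a full list of intrinsic names.

```python
import re

# Simplified C token patterns (hypothetical, not arocc's implementation).
TOKEN_RE = re.compile(
    r"""
      (?P<comment>//[^\n]*|/\*.*?\*/)                  # line and block comments
    | (?P<literal>"(?:\\.|[^"\\])*"|'(?:\\.|[^'\\])*')  # string and char literals
    | (?P<ident>[A-Za-z_]\w*)                          # identifiers and keywords
    | (?P<other>\S)                                    # any other single character
    """,
    re.VERBOSE | re.DOTALL,
)


def intrinsic_sequence(source, known):
    """Return known intrinsic names in source order, skipping comments and literals."""
    sequence = []
    for match in TOKEN_RE.finditer(source):
        name = match.group("ident")
        if name is not None and name in known:
            sequence.append(name)
    return sequence


code = '''
// _mm_add_ps in a comment is not a call
const char *msg = "_mm_mul_ps";
__m128 r = _mm_add_ps(_mm_mul_ps(a, b), c);
/* _mm_load_ps(p); */
_mm_storeu_ps(out, r);
'''
known = {"_mm_add_ps", "_mm_mul_ps", "_mm_load_ps", "_mm_storeu_ps"}
print(intrinsic_sequence(code, known))
# ['_mm_add_ps', '_mm_mul_ps', '_mm_storeu_ps']
```

This is not a complete C tokenizer. For example, it ignores line splices and does not treat preprocessor directives specially, both of which a real tokenizer such as arocc's handles.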

Putting the Pieces Together

With these tools in our arsenal, we built a multi-threaded repository scanner that divided the workload evenly across threads. Each thread scanned its own subset of the repositories, so every repository was processed by exactly one thread. This maximized throughput while keeping the threads independent and inter-thread communication overhead minimal.
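The scanner itself was written in Zig, and this post does not spell out how repositories were split among threads. The Python sketch below shows one plausible reading of the static scheme described above, assuming a strided split: thread `i` receives repositories `i`, `i + n`, `i + 2n`, and so on. Each thread accumulates counts locally, and results are merged only once per thread. `scan_repository` is a hypothetical placeholder that treats each repository as the list of intrinsic names a real scan would find.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def scan_repository(repo):
    """Hypothetical placeholder: a real scan would read the repository's files.

    Here a repository is simply the list of intrinsic names found in it.
    """
    return Counter(repo)


def scan_subset(repos):
    """Scan one thread's share of repositories into a thread-local Counter."""
    local = Counter()
    for repo in repos:
        local.update(scan_repository(repo))
    return local


def scan_all(repos, num_threads):
    """Split repositories across threads and merge the per-thread counts."""
    # Strided split: each repository lands in exactly one subset, and the
    # number of repositories per thread differs by at most one.
    subsets = [repos[i::num_threads] for i in range(num_threads)]
    total = Counter()
    with ThreadPoolExecutor(max_workers=num_threads) as pool:
        # Threads share no state while scanning; merging happens once per thread.
        for partial in pool.map(scan_subset, subsets):
            total.update(partial)
    return total


repos = [
    ["_mm_add_ps", "_mm_mul_ps"],
    ["vaddq_f32"],
    ["_mm_add_ps"],
    [],
    ["vec_add", "vaddq_f32"],
]
print(scan_all(repos, num_threads=2))
```

In CPython, the global interpreter lock prevents these threads from scanning in parallel. The sketch therefore demonstrates the partitioning, not the speedup. A compiled language such as Zig has no such limitation.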

3. Our Findings

Now, let's look at what we found after scanning a big chunk of GitHub's open-source projects.

3.1 General Repository Analysis

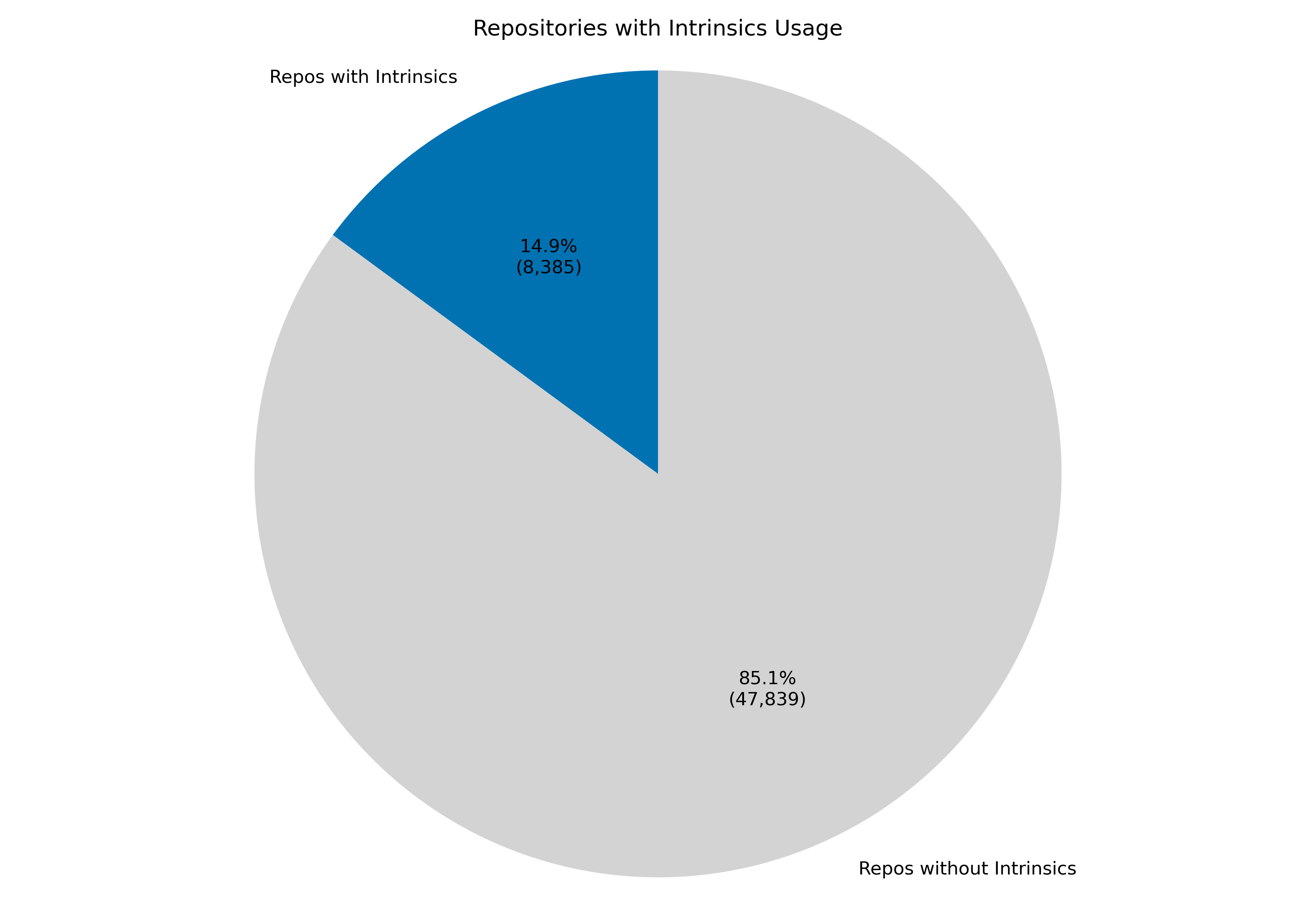

Our analysis of 56,224 GitHub repositories revealed interesting patterns in intrinsics usage across open-source projects. As shown in Figure 1, only 14.9% (8,385) of repositories use intrinsics in their codebase, while the majority (85.1%, or 47,839) do not use these specialized CPU instructions at all. This relatively low rate shows that, despite the performance benefits intrinsics can provide, they remain a specialized optimization technique used by a minority of projects.

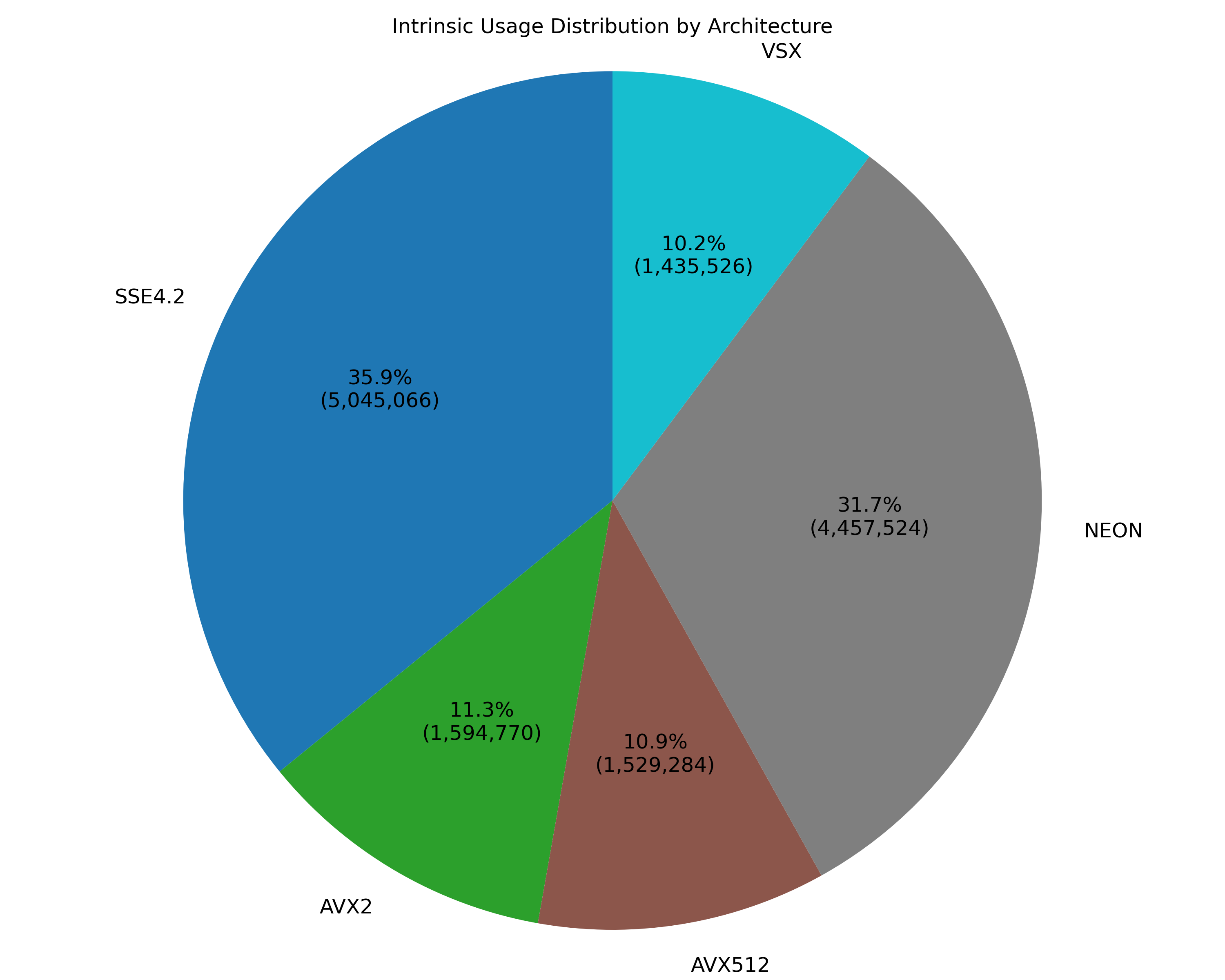

When examining the actual distribution of intrinsic occurrences by architecture (Figure 2), we found, as expected, that the largest share comes from Intel's SSE4.2, with 35.9% (5,045,066) of all intrinsic calls. Arm's Neon intrinsics are a close second, accounting for a substantial 31.7% (4,457,524) of total calls. This high share for Neon is noteworthy: it indicates the growing importance of Arm optimization in modern software development, likely driven by the mobile industry and the recent trend towards Arm-powered computers.

Intel's AVX2 and AVX512 instruction sets represent 11.3% (1,594,770) and 10.9% (1,529,284) of intrinsic calls respectively, while IBM Power's VSX intrinsics account for 10.2% (1,435,526). The near-parity among these three is notable. It suggests that, although they appear in fewer repositories overall, they are used with comparable frequency within the codebases that adopt them.
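The percentages above are shares of all intrinsic calls across the five families, not shares of repositories. As a quick check, the short sketch below recomputes them from the raw counts quoted in this section. It sums the counts and divides each one by the total.

```python
# Intrinsic-call counts per family, as quoted in this section.
CALLS = {
    "SSE4.2": 5_045_066,
    "Neon": 4_457_524,
    "AVX2": 1_594_770,
    "AVX512": 1_529_284,
    "VSX": 1_435_526,
}


def shares(counts):
    """Return the total and each family's percentage of it, rounded to 0.1."""
    total = sum(counts.values())
    return total, {name: round(100 * n / total, 1) for name, n in counts.items()}


total, pct = shares(CALLS)
print(total)  # 14062170
print(pct)    # {'SSE4.2': 35.9, 'Neon': 31.7, 'AVX2': 11.3, 'AVX512': 10.9, 'VSX': 10.2}
```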

3.2 Multi-architecture Configurations

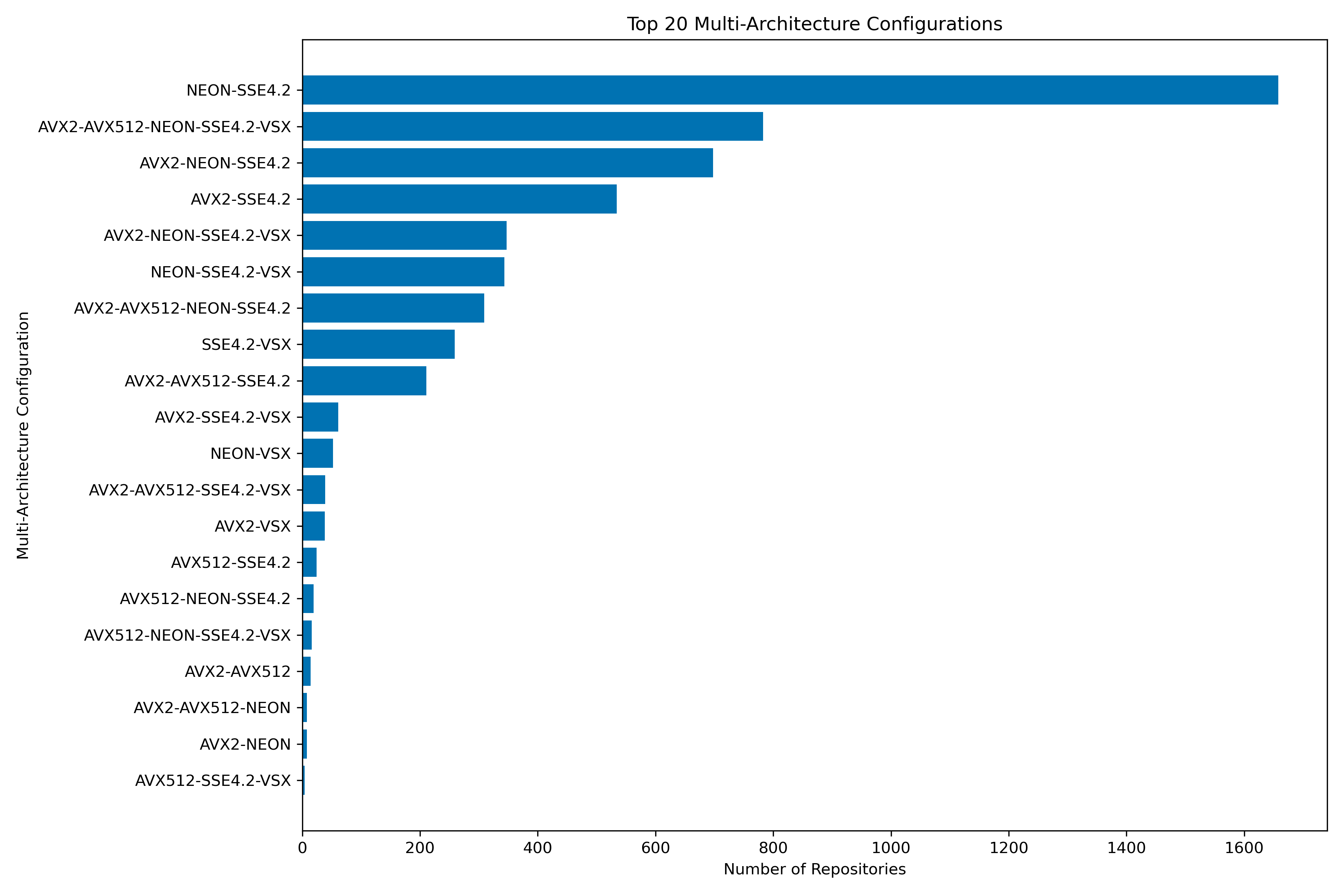

The intrinsics usage distribution alone doesn't tell the whole story. A good portion of the "intrinsic positive" repositories (those that use intrinsics at all) draw on two or more intrinsic families, which shows the importance of supporting multiple CPU architectures.

The most prominent multi-architecture configuration is Neon-SSE4.2, indicating that many projects support both Intel and Arm platforms. Together, these two account for the majority of the world's CPU market: Intel dominates desktop computers and servers, while Arm processors power most mobile devices and an increasing share of energy-efficient computing solutions.
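One way to tally configurations like Neon-SSE4.2 is to record the set of intrinsic families each repository uses and then count identical sets. The sketch below does that with made-up repository data. It assumes exact combinations are counted, so a repository using AVX2, Neon, and SSE4.2 does not also count toward Neon-SSE4.2. The post does not say which convention the Statistics page uses.

```python
from collections import Counter

# Made-up scan results: intrinsic families detected in each repository.
repo_families = {
    "repo-a": {"SSE4.2", "Neon"},
    "repo-b": {"SSE4.2"},
    "repo-c": {"SSE4.2", "Neon", "AVX2"},
    "repo-d": {"Neon", "SSE4.2"},
    "repo-e": {"VSX"},
}


def multi_arch_configs(families_by_repo):
    """Count each exact family combination among repos using two or more families."""
    configs = Counter()
    for families in families_by_repo.values():
        if len(families) >= 2:
            # Sorting gives one canonical key per set, e.g. "Neon-SSE4.2".
            configs["-".join(sorted(families))] += 1
    return configs


print(multi_arch_configs(repo_families).most_common())
# [('Neon-SSE4.2', 2), ('AVX2-Neon-SSE4.2', 1)]
```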

3.3 Top Intrinsics

3.3.1 Single Intrinsics

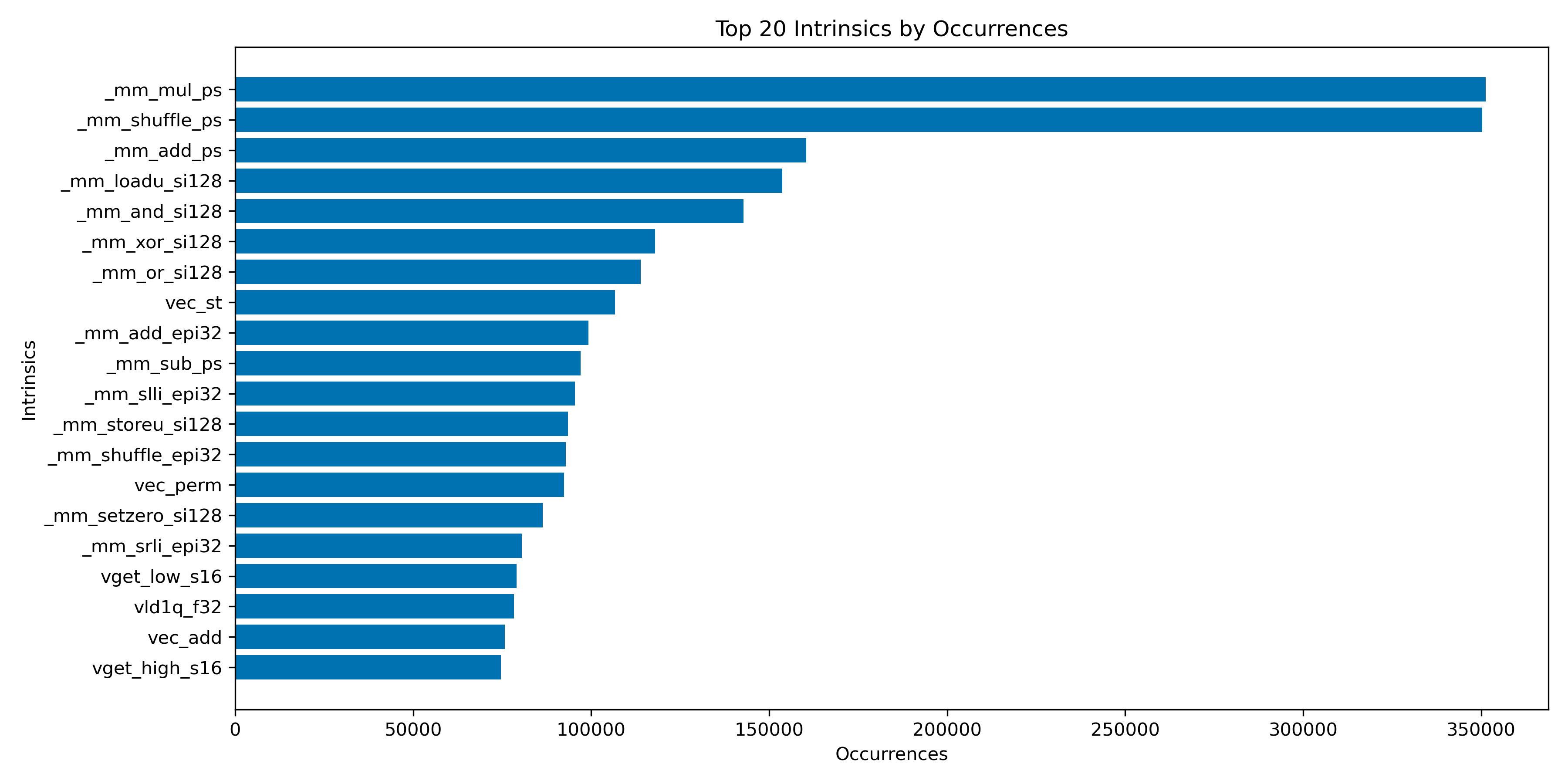

In our Statistics section, we cover the top intrinsics for every architecture. The plot below shows the top 20 intrinsics overall. Load, store, add, and multiply operations are the most used, and the SSE4.2 intrinsics "_mm_mul_ps" and "_mm_shuffle_ps" reach 350 thousand occurrences. For the top intrinsics of each individual architecture, visit our Statistics section.

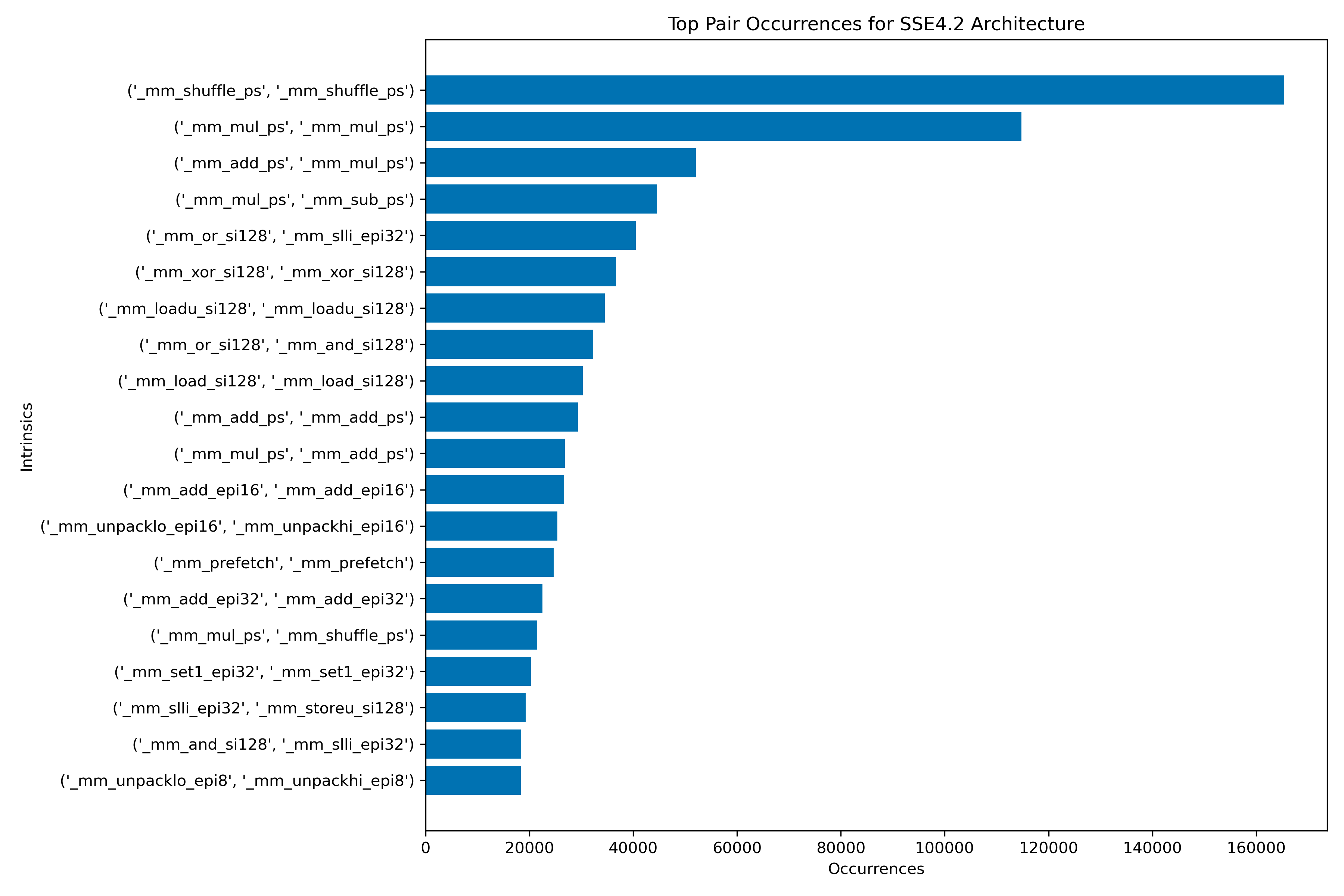

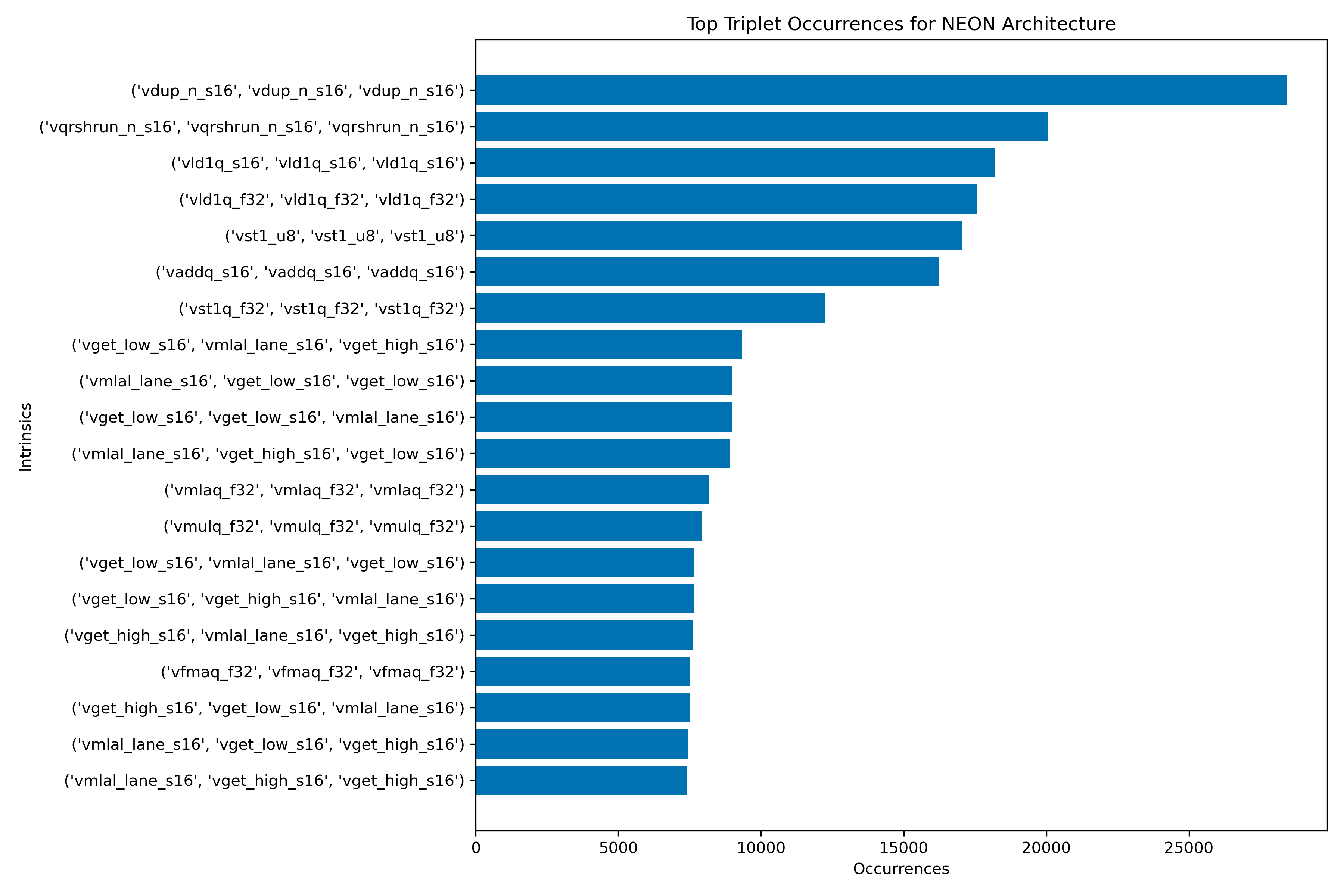
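Per-architecture top lists, like the ones in our Statistics section, come down to grouping total counts by intrinsic family and sorting each group. The sketch below assumes a hypothetical `family_of` lookup from intrinsic name to family (the kind of mapping a catalog such as simd.info provides). The counts are made up, not the survey's numbers.

```python
from collections import defaultdict


def top_per_family(counts, family_of, n=20):
    """Return the n most used intrinsics for each family.

    counts:    intrinsic name -> total occurrences across all repositories
    family_of: intrinsic name -> family such as "SSE4.2" (hypothetical lookup)
    """
    grouped = defaultdict(list)
    for name, count in counts.items():
        grouped[family_of[name]].append((name, count))
    # Highest count first; ties are broken by name so the output is deterministic.
    return {
        family: sorted(items, key=lambda item: (-item[1], item[0]))[:n]
        for family, items in grouped.items()
    }


# Made-up counts for illustration only.
counts = {
    "_mm_mul_ps": 9,
    "_mm_shuffle_ps": 9,
    "_mm_add_ps": 4,
    "vld1q_f32": 7,
    "vaddq_f32": 5,
    "vst1q_f32": 2,
}
family_of = {
    "_mm_mul_ps": "SSE4.2",
    "_mm_shuffle_ps": "SSE4.2",
    "_mm_add_ps": "SSE4.2",
    "vld1q_f32": "Neon",
    "vaddq_f32": "Neon",
    "vst1q_f32": "Neon",
}
print(top_per_family(counts, family_of, n=2))
```

Mapping every name to one shared key, for example with `dict.fromkeys(counts, "all")`, produces a single overall ranking instead of one per family.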

3.3.2 Pairs and Triplets

An interesting pattern in intrinsics code is a group of two or three intrinsics appearing together, either nested (one call used as an argument of another) or one right after the other. With the help of the tokenizer, we identified the most frequent pairs and triplets, which are now available in the Statistics section.
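Once a file has been reduced to the ordered list of intrinsic names it calls, pairs and triplets can be counted with a sliding window. The sketch below is a simplified illustration, not our exact rule: it treats any two or three consecutive intrinsic calls in a file as a group. A nested call such as `_mm_add_ps(_mm_mul_ps(a, b), c)` appears in source order as the outer name followed by the inner one, so both nested and back-to-back calls are captured. A production scanner might additionally limit the window to a single statement or a few lines.

```python
from collections import Counter


def ngram_counts(sequence, n):
    """Count every run of n consecutive intrinsic names in a call sequence."""
    return Counter(tuple(sequence[i:i + n]) for i in range(len(sequence) - n + 1))


# Intrinsics in source order for:
#   __m128 r = _mm_add_ps(_mm_mul_ps(a, b), c);
#   _mm_storeu_ps(out, r);
calls = ["_mm_add_ps", "_mm_mul_ps", "_mm_storeu_ps"]

pairs = ngram_counts(calls, 2)
triplets = ngram_counts(calls, 3)
print(pairs)
print(triplets)
```

Summing these per-file `Counter` objects across all files gives repository-wide, and then dataset-wide, pair and triplet frequencies.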

3.4 Least Used Intrinsics

As in our last blog post, we again looked at the least used intrinsics, this time raising the threshold to fewer than 100 occurrences.

| Architecture | Intrinsics with fewer than 100 matches (0.001%) |
|---|---|
| Intel SSE4.2 | 0 |
| Intel AVX2 (includes AVX) | 7 |
| Intel AVX512 | 907 |
| Arm Neon | 91 |
| Power VSX | 7 |

AVX512 has the largest number of rarely used intrinsics of all architectures (907 out of 4,955, approx. 18%), and Neon comes second with 91. Another interesting finding is that many rarely used intrinsics on the same architecture have identical occurrence counts, which strongly suggests that each such group comes from a single project. These findings suggest one or more of the following:

- The intrinsics perform very specific tasks.

- The programming community does not know enough about them.

- Official documentation for them is lacking.
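Both steps described above are simple to express in code. First, keep only the intrinsics below the 100-occurrence threshold. Then group the survivors by identical count, since a group with several members is a candidate for the single-project explanation. The sketch below uses real AVX512 intrinsic names with made-up counts. Confirming that a group really comes from one project would still require checking which repositories the matches came from.

```python
from collections import defaultdict


def rarely_used(counts, threshold=100):
    """Keep only intrinsics with fewer than `threshold` occurrences."""
    return {name: count for name, count in counts.items() if count < threshold}


def shared_counts(rare):
    """Group rare intrinsics by identical occurrence count.

    Groups with two or more members hint that the matches come from one project.
    """
    groups = defaultdict(list)
    for name, count in rare.items():
        groups[count].append(name)
    return {count: sorted(names) for count, names in groups.items() if len(names) > 1}


# Real AVX512 intrinsic names with made-up counts, for illustration only.
counts = {
    "_mm512_add_ps": 5200,
    "_mm512_mask_add_ps": 37,
    "_mm512_maskz_add_ps": 37,
    "_mm512_mask_mul_ps": 8,
}
rare = rarely_used(counts)
print(rare)
print(shared_counts(rare))
```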

You can explore the least used intrinsics further in the Statistics section of simd.info.

4. Conclusion

So what did we learn from all this? Looking at over 56,000 GitHub repos gave us a much clearer picture of how developers are actually using intrinsics in the real world.

Only about 15% of projects use intrinsics at all, which shows they're still a fairly specialized tool. When projects do use them, Intel's SSE4.2 leads the pack, but Arm's Neon is right behind it, showing how important mobile and energy-efficient computing has become.

The fact that so many projects support both Intel and Arm confirms what we already suspected: these two architectures dominate the CPU world. Intel powers most desktops and servers, while Arm rules mobile devices and, thanks to its strong performance and power efficiency, is the fastest-growing player in the datacenter market.

We also found some interesting patterns in how intrinsics are used together, which could help developers optimize their code. We also spotted that AVX512 has a ton of intrinsics that almost nobody uses, whether because they're too specialized, poorly documented, or simply not yet known to developers.

We'll keep tracking these trends with frequent updates on simd.info, so check back to see how things change over time!
